Interpreting Object Detection using $B\text{-}\cos$

This project explores the application of the B-cos method for inherently interpretable deep learning models, extending its use to object detection tasks using YOLO.

Overview

B-cos was originally proposed for Convolutional Neural Networks (CNNs) and Vision Transformers (ViTs). It replaces the traditional linear transformations in a network with cosine similarity-based operations, which makes the model inherently interpretable. This project applies the same idea to object detection.

$B\text{-}\cos$ introduces a novel layer that computes the cosine similarity between the input and weight vectors, generating contribution maps that highlight the most relevant features for classification. This method offers a trade-off between performance and interpretability, making it suitable for applications requiring model transparency and explanation.

The B-cos transformation is defined as:

\[\text{B-cos}(\mathbf{x}; \mathbf{w}) = \|\mathbf{x}\| \cdot \left| \cos\left( \angle (\mathbf{x}, \mathbf{w}) \right) \right|^{B} \cdot \text{sgn}\left( \cos\left( \angle (\mathbf{x}, \mathbf{w}) \right) \right)\]

Here:

- $ \mathbf{x} $ is the input vector.

- $ \mathbf{w} $ is the weight vector.

- $ \|\mathbf{x}\| $ denotes the Euclidean norm (magnitude) of $ \mathbf{x} $.

- $ \cos\left( \angle (\mathbf{x}, \mathbf{w}) \right) $ represents the cosine of the angle between $ \mathbf{x} $ and $ \mathbf{w} $.

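- $ B $ is a hyperparameter that controls how strongly alignment is emphasized: the larger $ B $, the more the output is suppressed when $ \mathbf{x} $ and $ \mathbf{w} $ are poorly aligned.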

- $ \text{sgn} $ is the sign function, indicating the sign of the cosine value.

This transformation encourages the alignment between the input and weight vectors, promoting interpretability in deep neural networks. (CVF Open Access)
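As a concrete illustration of the definition above, here is a minimal pure-Python sketch for a single unit. The function name `b_cos` and the convention of returning zero for a zero vector are assumptions made for this example; they are not taken from the project's codebase.

```python
import math


def b_cos(x, w, b=2.0):
    """B-cos response of one unit: ||x|| * |cos(x, w)|^B * sgn(cos(x, w))."""
    dot = sum(xi * wi for xi, wi in zip(x, w))
    norm_x = math.sqrt(sum(xi * xi for xi in x))
    norm_w = math.sqrt(sum(wi * wi for wi in w))
    if norm_x == 0.0 or norm_w == 0.0:
        # The angle is undefined for a zero vector; returning 0 is an
        # assumption of this sketch.
        return 0.0
    cos = dot / (norm_x * norm_w)
    return norm_x * abs(cos) ** b * math.copysign(1.0, cos)


# Same input and weight, different values of B.
x, w = [3.0, 4.0], [1.0, 0.0]
linear_like = b_cos(x, w, b=1.0)
sharpened = b_cos(x, w, b=2.0)
```

With $ \mathbf{x} = (3, 4) $ and $ \mathbf{w} = (1, 0) $ the cosine is 0.6, so the response is about 3.0 for $ B = 1 $ and about 1.8 for $ B = 2 $: raising $ B $ shrinks the response to imperfectly aligned inputs, while a perfectly aligned input keeps its full norm.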

We reproduced key experiments from the original paper, “B-cos Alignment for Inherently Interpretable CNNs and Vision Transformers”, using ResNet18 on the CIFAR-10 dataset. Furthermore, we extended the method to object detection by adapting it to YOLO and evaluating its performance on the Fruit Dataset. Key contributions include:

- Reproducing and validating the results of the original paper.

- Analyzing the architectural design decisions, such as the absence of ReLU and normalization layers.

- Implementing and demonstrating the applicability of the B-cos method in object detection tasks.

A GitHub repository with the complete codebase is available here.

More details can be found in the project report below.

Methodology

Reproducing B-cos on ResNet18

We incorporated B-cos layers into ResNet18 for image classification on CIFAR-10, replacing standard convolutional layers. The experiments focused on:

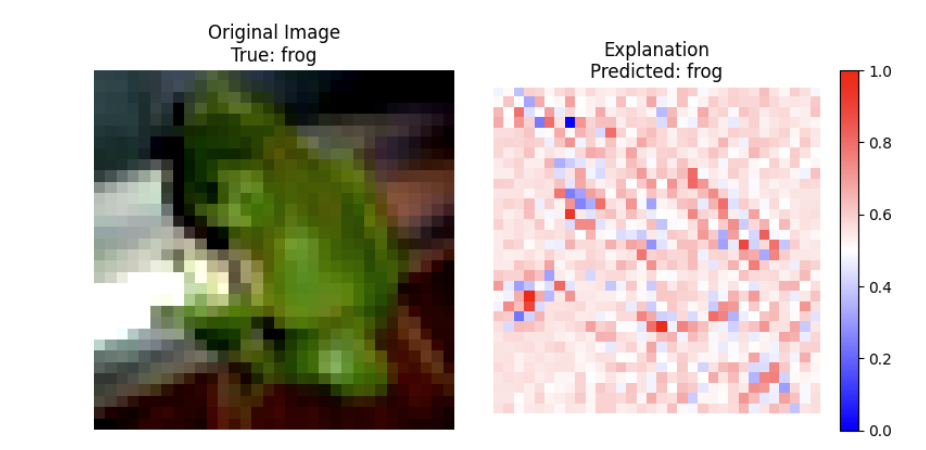

- Validating interpretability improvements through contribution maps.

- Exploring the impact of key hyperparameters, including the non-linearity parameter B and the addition of bias or activation functions.
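Contribution maps rely on the fact that the B-cos response can be rewritten as an input-dependent linear map, $ \mathbf{w}(\mathbf{x})^{\top} \mathbf{x} $ with $ \mathbf{w}(\mathbf{x}) = |\cos(\angle(\mathbf{x}, \mathbf{w}))|^{B-1} \, \hat{\mathbf{w}} $ and $ \hat{\mathbf{w}} = \mathbf{w} / \|\mathbf{w}\| $, so each input dimension receives the share $ \mathbf{w}(\mathbf{x})_i \, x_i $. The sketch below shows this for a single unit only. The helper name `unit_contributions` is hypothetical, and chaining these dynamic weights across the layers of a full network is not covered here.

```python
import math


def unit_contributions(x, w, b=2.0):
    """Per-dimension contributions of x to one B-cos unit.

    The contributions sum to the unit's B-cos response.
    """
    norm_x = math.sqrt(sum(v * v for v in x))
    norm_w = math.sqrt(sum(v * v for v in w))
    if norm_x == 0.0 or norm_w == 0.0:
        # Zero vectors have no defined angle; treated as no contribution
        # (an assumption of this sketch).
        return [0.0] * len(x)
    w_hat = [v / norm_w for v in w]
    cos = sum(xi * wi for xi, wi in zip(x, w_hat)) / norm_x
    scale = abs(cos) ** (b - 1.0)  # input-dependent down-weighting
    return [scale * wi * xi for wi, xi in zip(w_hat, x)]


x, w = [3.0, 4.0], [1.0, 1.0]
low_b = unit_contributions(x, w, b=1.0)
high_b = unit_contributions(x, w, b=3.0)
```

Because the scaling factor $ |\cos|^{B-1} $ is at most 1, a larger $ B $ shrinks the contributions of inputs that are only partially aligned with the weight vector.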

Extending B-cos to YOLO

To extend B-cos for object detection, we replaced all convolutional layers in YOLO with B-cos transformations. This allowed YOLO to generate localized, interpretable contribution maps while preserving detection performance.
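The layer swap can be sketched in PyTorch as follows. This is a simplified illustration under stated assumptions, not the project's or the original paper's implementation: `BcosConv2d` and `swap_convs` are hypothetical names, the layer omits extras that a full implementation may include, grouped convolutions are skipped, and a toy `nn.Sequential` stands in for YOLO because the exact YOLO variant and its module layout are not specified here.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class BcosConv2d(nn.Conv2d):
    """Simplified B-cos convolution (illustrative sketch, groups == 1 only)."""

    def __init__(self, *args, b: float = 2.0, **kwargs):
        kwargs["bias"] = False  # this sketch uses no bias term
        super().__init__(*args, **kwargs)
        self.b = b

    def forward(self, x):
        # Normalize each output filter to unit norm.
        w_hat = F.normalize(self.weight.flatten(1), dim=1).view_as(self.weight)
        lin = F.conv2d(x, w_hat, None, self.stride, self.padding, self.dilation)
        # Norm of the input patch seen at each output location.
        ones = torch.ones(1, 1, *self.kernel_size, dtype=x.dtype, device=x.device)
        sq = x.pow(2).sum(dim=1, keepdim=True)
        patch_norm = F.conv2d(
            sq, ones, None, self.stride, self.padding, self.dilation
        ).clamp_min(1e-12).sqrt()
        cos = lin / patch_norm
        # lin = ||x|| * cos, so lin * |cos|^(B-1) = ||x|| * |cos|^B * sgn(cos).
        return lin * cos.abs().pow(self.b - 1)


def swap_convs(module: nn.Module, b: float = 2.0) -> nn.Module:
    """Recursively replace plain nn.Conv2d layers with BcosConv2d (in place)."""
    for name, child in list(module.named_children()):
        is_plain_conv = isinstance(child, nn.Conv2d) and not isinstance(child, BcosConv2d)
        if is_plain_conv and child.groups == 1:
            new_layer = BcosConv2d(
                child.in_channels,
                child.out_channels,
                child.kernel_size,
                stride=child.stride,
                padding=child.padding,
                dilation=child.dilation,
                b=b,
            )
            setattr(module, name, new_layer)  # weights are freshly initialized
        else:
            swap_convs(child, b)
    return module


# Toy stand-in for a detector backbone (not an actual YOLO model).
toy = nn.Sequential(nn.Conv2d(3, 8, 3, padding=1), nn.Conv2d(8, 4, 1))
toy = swap_convs(toy, b=2.0)
out = toy(torch.randn(1, 3, 16, 16))
```

The swapped layers are re-initialized rather than copied from the original convolutions, so a model converted this way would need to be trained again; how normalization and activation layers are handled is a separate design decision that this sketch leaves untouched.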

Results

Image Classification on CIFAR-10

ResNet18 with B-cos layers reached 84.17% accuracy, giving up about 3% in performance in exchange for improved interpretability. Varying the B parameter showed that higher values yielded both better accuracy and more focused contribution maps.

Object Detection with YOLO

B-cos-enabled YOLO maintained competitive performance while generating interpretable explanation maps for object detection tasks. Figures demonstrate the localized maps for detecting objects like bananas and oranges, showcasing the method’s broader applicability.